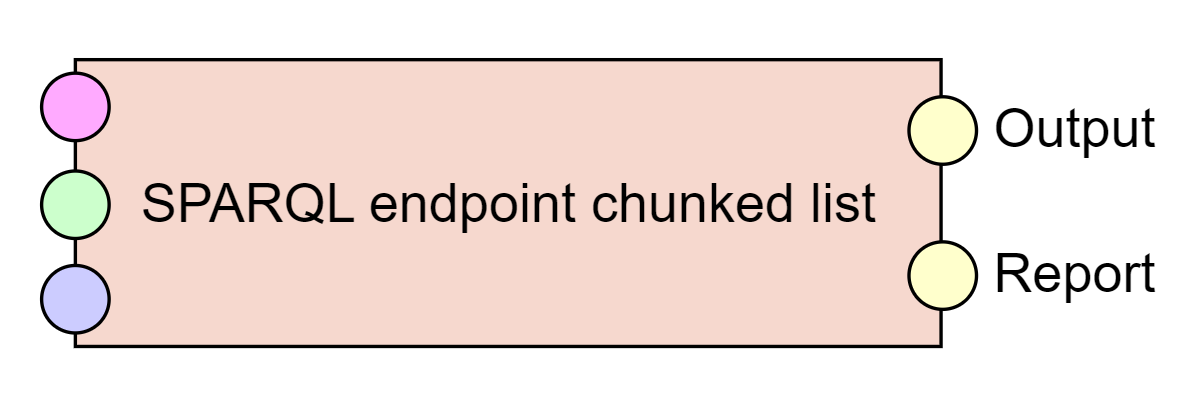

SPARQL endpoint chunked list

Extractor. Allows the user to extract RDF triples from SPARQL endpoints using a series of CONSTRUCT queries. It is especially suitable for querying multiple SPARQL endpoints. It is also suitable for bigger data, because it queries the endpoints for descriptions of a limited number of entities at a time and creates a separate RDF data chunk from each query.

- Number of threads to use

- Number of threads to be used for querying in total.

- Query time limit in seconds (-1 for no limit)

- Some SPARQL endpoints may hang on a query for a long time. Sometimes it is therefore desirable to limit the time waiting for an answer so that the whole pipeline execution is not stuck.

- Encode invalid IRIs

- Some SPARQL endpoints such as DBpedia contain invalid IRIs, which are then returned in the results of SPARQL queries. Some libraries like RDF4J can crash on those IRIs. If this is the case, choose this option to encode such invalid IRIs.

- Fix missing language tags on rdf:langString literals

- Some SPARQL endpoints such as DBpedia contain rdf:langString literals without language tags, which is invalid RDF 1.1. Some libraries like RDF4J can then crash on those literals. If this is the case, choose this option to fix this problem by replacing rdf:langString with the xsd:string datatype on such literals.
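
The two repair options above can be illustrated with a small Python sketch. It is not the component's implementation, and the function names are hypothetical. It assumes that "encoding" an invalid IRI means percent-encoding the characters that the SPARQL and Turtle IRI grammar forbids, and it applies the language tag fix as a plain text substitution on N-Triples lines rather than with a real RDF parser.

```python
import re
from urllib.parse import quote

# Characters the SPARQL/Turtle IRIREF grammar forbids inside <...>:
# U+0000 to U+0020 (controls and space) plus < > " { } | ^ ` \
_FORBIDDEN_IN_IRI = re.compile(r'[\x00-\x20<>"{}|^`\\]')

RDF_LANGSTRING = "http://www.w3.org/1999/02/22-rdf-syntax-ns#langString"
XSD_STRING = "http://www.w3.org/2001/XMLSchema#string"


def encode_invalid_iri(iri: str) -> str:
    """Percent-encode characters that may not appear in an IRI reference.

    Assumption: the component's exact encoding rules are not documented here.
    """
    return _FORBIDDEN_IN_IRI.sub(lambda m: quote(m.group(0), safe=""), iri)


def fix_lang_string(ntriples_line: str) -> str:
    """Retype literals explicitly typed as rdf:langString to xsd:string.

    In N-Triples a language-tagged literal is written "text"@tag, so a
    literal written as "text"^^<...#langString> carries no language tag.
    """
    return ntriples_line.replace(
        '"^^<' + RDF_LANGSTRING + ">",
        '"^^<' + XSD_STRING + ">",
    )


if __name__ == "__main__":
    print(encode_invalid_iri("http://example.org/a b|c"))
    print(fix_lang_string(
        '<http://example.org/s> <http://example.org/p> '
        '"hello"^^<' + RDF_LANGSTRING + "> ."
    ))
```

A text-level substitution like this can misfire on unusual escaping inside literals, so a production fix would more safely operate on parsed statements.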

Characteristics

- ID

- e-sparqlendpointchunkedlist

- Type

- extractor

- Inputs

- RDF single graph - Configuration

- Files - Lists of values in CSV files for each task

- RDF single graph - Tasks

- Outputs

- RDF chunked - Output

- RDF single graph - Report

- Look in pipeline

The SPARQL endpoint chunked list component queries a list of remote SPARQL endpoints using SPARQL CONSTRUCT queries. Typical scenarios include discovery tasks, such as determining which classes are used in which endpoints.

On the Tasks input, the component expects a list of tasks specifying endpoints and queries. This chunked version of the component is suitable for bigger data, which needs to be queried in parts. The typical use case is getting descriptions of a large number of entities of the same type, which would be too big to retrieve in one query.

On the Files input, the component expects CSV files containing columns with headers.

The column headers are the names of variables, which will be used in the SPARQL queries.

The rows then contain the list of values assigned to the variables.

The list is split into pieces determined by the Chunk size parameter of the task specification and each piece is inserted into the query using a VALUES clause in place of the ${VALUES} placeholder, forming one RDF data chunk on the output.

The input list of values can be created either manually, or using the SPARQL endpoint select, SPARQL select multiple or SPARQL endpoint select scrollable cursor components.
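
The splitting and substitution described above can be sketched in Python. This is a minimal illustration, not the component's actual code, and all function names are hypothetical. Two details are assumptions because this page does not state them: every CSV column becomes a variable in the VALUES clause, and values starting with `http://`, `https://` or `urn:` are written as IRIs while all other values are written as plain string literals.

```python
import csv
import io
from typing import Iterator, List


def to_term(value: str) -> str:
    """Render one CSV cell as a SPARQL term (assumed rule, see text above)."""
    if value.startswith(("http://", "https://", "urn:")):
        return "<" + value + ">"
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return '"' + escaped + '"'


def values_clauses(csv_text: str, chunk_size: int) -> Iterator[str]:
    """Yield one VALUES clause per chunk of at most chunk_size rows."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)                   # column headers are variable names
    rows = [row for row in reader if row]   # ignore empty lines
    variables = " ".join("?" + name for name in header)
    for start in range(0, len(rows), chunk_size):
        chunk = rows[start:start + chunk_size]
        body = "\n".join(
            "  ( " + " ".join(to_term(cell) for cell in row) + " )"
            for row in chunk
        )
        yield "VALUES ( " + variables + " ) {\n" + body + "\n}"


def chunk_queries(template: str, csv_text: str, chunk_size: int) -> List[str]:
    """Build one query per chunk by filling the ${VALUES} placeholder."""
    return [
        template.replace("${VALUES}", clause)
        for clause in values_clauses(csv_text, chunk_size)
    ]


if __name__ == "__main__":
    sample_csv = (
        "Class,instances\n"
        "http://xmlns.com/foaf/0.1/Document,1759\n"
        "http://reegle.info/schema#Project,198\n"
        "http://reegle.info/schema#Sector,36\n"
    )
    template = "SELECT ?resource ?Class WHERE { ${VALUES} ?resource a ?Class . }"
    for query in chunk_queries(template, sample_csv, chunk_size=2):
        print(query)
        print("---")
```

With three data rows and a chunk size of 2, the sketch builds two queries, and each of them would correspond to one RDF data chunk on the output.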

The Output contains the collected results as chunks, where one Task produces multiple chunks according to the runtime configuration and the corresponding input CSV file. The Report output contains potential error messages encountered when querying the SPARQL endpoints.

Tasks specification

Below is a sample task specification for the component. The task queries a given endpoint for resources that are instances of specific classes. The list of class IRIs is given in the specified CSV file.

    @prefix sel: <http://plugins.linkedpipes.com/ontology/e-sparqlEndpointChunkedList#> .

    <urn:uuid:0b6d0abb-5040-4511-8a05-f73d95bff5fc> a sel:Task;
        sel:chunkSize "1";
        sel:endpoint "http://sparql.reegle.info/";
        sel:fileName "http___sparql_reegle_info_.csv";
        sel:query """
    PREFIX adhoc: <http://linked.opendata.cz/ontology/adhoc/>
    CONSTRUCT {
      ?subj adhoc:resource ?resource ;
        adhoc:class ?Class ;
        adhoc:endpointUri \"http://sparql.reegle.info/\" .
    } WHERE {
      {
        SELECT ?resource ?Class
        WHERE {
          ${VALUES}
          ?resource a ?Class .
        }
        LIMIT 5
      }
      BIND(UUID() as ?subj)
    }
    """ .

The provided CSV file looks like this (only the Class column/variable is used in the query):

    Class,instances
    http://xmlns.com/foaf/0.1/Document,1759
    http://reegle.info/schema#ProjectOutput,284
    http://www.geonames.org/ontology#Feature,250
    http://reegle.info/schema#Project,198
    http://reegle.info/schema#CountryProfile,191
    http://www.w3.org/2002/07/owl#DatatypeProperty,62
    http://www.w3.org/1999/02/22-rdf-syntax-ns#Property,57
    http://reegle.info/schema#Specialisation,37
    http://reegle.info/schema#Sector,36
    http://reegle.info/schema#Technology,26
    http://www.w3.org/2004/02/skos/core#Concept,15
    http://www.w3.org/2002/07/owl#Class,12
    http://www.w3.org/2002/07/owl#ObjectProperty,10
    http://www.w3.org/2000/01/rdf-schema#Class,9
    http://www.w3.org/2002/07/owl#AnnotationProperty,2
    http://www.w3.org/2002/07/owl#Ontology,2
    http://www.w3.org/2002/07/owl#Thing,2
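
When the same query should run against many endpoints, writing one task per endpoint by hand becomes tedious. The following Python sketch generates a Tasks document in the shape of the sample specification. The function names are hypothetical. Deriving the CSV file name by replacing every non-alphanumeric character of the endpoint URL with an underscore is an assumption inferred from the single sample above, not a documented requirement. Endpoint URLs are assumed to contain no double quotes.

```python
import re
import uuid
from typing import Iterable

PREFIX = (
    "@prefix sel: "
    "<http://plugins.linkedpipes.com/ontology/e-sparqlEndpointChunkedList#> ."
)


def csv_name_for(endpoint: str) -> str:
    """Derive a CSV file name from an endpoint URL (assumed convention)."""
    return re.sub(r"[^A-Za-z0-9]", "_", endpoint) + ".csv"


def turtle_long_string(text: str) -> str:
    """Quote text as a Turtle long string, escaping backslashes and quotes."""
    escaped = text.replace("\\", "\\\\").replace('"', '\\"')
    return '"""' + escaped + '"""'


def task_turtle(endpoint: str, query: str, chunk_size: int) -> str:
    """Render one sel:Task with a fresh UUID-based identifier."""
    return "\n".join([
        "<urn:uuid:" + str(uuid.uuid4()) + "> a sel:Task;",
        '    sel:chunkSize "' + str(chunk_size) + '";',
        '    sel:endpoint "' + endpoint + '";',
        '    sel:fileName "' + csv_name_for(endpoint) + '";',
        "    sel:query " + turtle_long_string(query) + " .",
    ])


def tasks_document(endpoints: Iterable[str], query: str, chunk_size: int) -> str:
    """Render the prefix declaration followed by one task per endpoint."""
    tasks = [task_turtle(endpoint, query, chunk_size) for endpoint in endpoints]
    return "\n\n".join([PREFIX] + tasks)


if __name__ == "__main__":
    query = "CONSTRUCT { ?resource a ?Class } WHERE { ${VALUES} ?resource a ?Class . }"
    # The second endpoint URL is a made-up placeholder.
    print(tasks_document(
        ["http://sparql.reegle.info/", "http://example.org/sparql"],
        query,
        chunk_size=1,
    ))
```

The `${VALUES}` placeholder is left untouched in the generated tasks, since the component fills it in from the corresponding CSV file at run time.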