Load to Wikibase

A loader that lets the user load RDF data in the Wikibase RDF Dump Format into a Wikibase instance. A complete tutorial on Loading data to Wikibase is also available.

- Wikibase API Endpoint URL (api.php)

- This is the URL of api.php in the target Wikibase instance, for example https://www.wikidata.org/w/api.php

- Wikibase Query Service SPARQL Endpoint URL

- This is the URL of the SPARQL Endpoint of the Wikibase Query Service containing data from the target Wikibase instance, for example https://query.wikidata.org/sparql

- Any existing property from the target Wikibase instance

- e.g. P1921 for Wikidata. This is necessary so that the underlying library can determine the data types of properties used in the Wikibase.

- Wikibase ontology IRI prefix

- This is the common part of the IRI prefixes used by the target Wikibase instance, for example http://www.wikidata.org/. This can be determined by looking at an RDF representation of the Q1 entity in Wikidata, specifically the wd: and similar prefixes.

- User name

- User name for the Wikibase instance.

- Password

- Password for the Wikibase instance.

- Average time per edit in ms

- This is used by the wrapped Wikidata Toolkit to pace the API calls.

- Use strict matching (string-based) for value matching

- Wikibase distinguishes among various string representations of the same number, e.g. 1 and 01, whereas in RDF, all those representations are considered equivalent and interchangeable. When enabled, the textual representations are considered different, which may lead to unnecessary duplicates.

- Skip on error

- When a Wikibase API call fails and this is enabled, the component continues its execution. Errors are logged in the Report output.

- Retry count

- Number of retries in case of IOException, i.e. not for all errors.

- Retry pause

- Time between individual requests in case of failure.

- Create item message

- MediaWiki edit summary message when creating items.

- Update item message

- MediaWiki edit summary message when updating items.

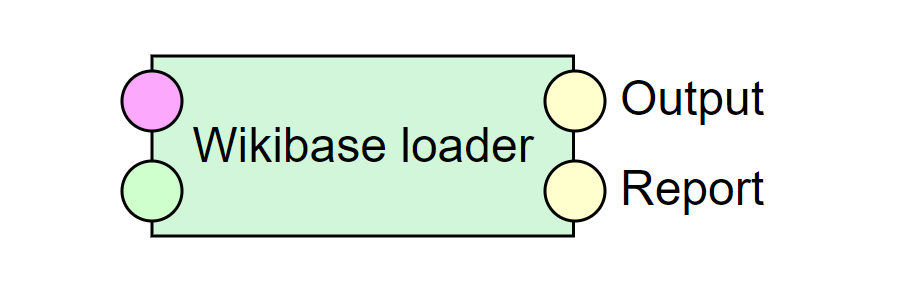

Characteristics

- ID

- l-wikibase

- Type

- loader

- Inputs

- RDF single graph - Configuration

- RDF single graph - Input

- Outputs

- RDF single graph - Output mapping

- RDF single graph - Report

The Load to Wikibase component takes the input RDF data using the Wikibase RDF Dump Format and loads it to a specified Wikibase instance.

This component was developed thanks to the Wikimedia Foundation Project Grant Wikidata & ETL.

The component currently does NOT work with: Ranks, Lexemes, Redirects and Sitelinks.

Shape of the input data

Simply put, the component input represents the desired state of the data in the Wikibase instance.

Specifically, this means that the IRIs of Items and Statements on the input need to match those in their RDF representations in the target Wikibase, if they are to be updated.

The expected format can be seen by using the Special:EntityData function on the Item to be updated in the target Wikibase, e.g. Q1 in RDF.

There, we may see data like this:

@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .

@prefix wd: <http://www.wikidata.org/entity/> .

wd:Q1 rdfs:label "Univers"@fr, "universe"@en-gb, "vesmír"@cs,

"فضاء كوني"@ar, "گەردوون"@ckb, "Çут Тĕнче"@cv,

"σύμπαν"@el, "گیتی"@fa, "બ્રહ્માંડ"@gu, ...

Note that by default, the RDF format is missing statements about types of things such as:

@prefix wd: <http://www.wikidata.org/entity/> .

@prefix wikibase: <http://wikiba.se/ontology#> .

wd:Q1 a wikibase:Item .

These are, however, required on the input of the component, so that the loader can distinguish an Item from a Statement and other things which may be present in the input.

Creating new Items

The next question is what the IRIs of Items to be created in the target Wikibase should look like, given that they do not have a Q number assigned yet.

The answer is that they can be any IRI (not a blank node), as long as they are tagged by the loader:New class, like this:

@prefix schema: <http://schema.org/> .

@prefix wd: <http://www.wikidata.org/entity/> .

@prefix loader: <http://plugins.linkedpipes.com/ontology/l-wikibase#> .

@prefix wikibase: <http://wikiba.se/ontology#> .

<urn:MyNewItem> a wikibase:Item, loader:New ;

schema:name "My new Item to be created"@en .

The resulting mapping of the newly created Items is given by the component as a linkset using the loader:mappedTo predicate on the output, like this:

@prefix wd: <http://www.wikidata.org/entity/> .

@prefix loader: <http://plugins.linkedpipes.com/ontology/l-wikibase#> .

<urn:MyNewItem> loader:mappedTo wd:Q9999999999 .

Working with labels

To specify a label for an item, schema:name needs to be used on the component input.

Note that in the RDF representations of Items coming directly from Wikibase, e.g. Q1 in RDF, schema:name, rdfs:label and skos:prefLabel are used.

However, only rdfs:label is then present in the Wikibase SPARQL Endpoint.

Besides labels, there are descriptions (schema:description) and aliases (skos:altLabel).

Note that Wikidata enforces a constraint that no two Items can share the same pair of label and description in any given language.
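
The predicates named in this section can be combined on a single Item. The following is a minimal sketch, not a confirmed input format: it reuses the <urn:MyNewItem> example from the section on creating new Items and assumes that descriptions and aliases are read from the input in the same way as labels. The description and alias texts are made up for illustration.

```turtle
@prefix schema: <http://schema.org/> .
@prefix skos: <http://www.w3.org/2004/02/skos/core#> .
@prefix loader: <http://plugins.linkedpipes.com/ontology/l-wikibase#> .
@prefix wikibase: <http://wikiba.se/ontology#> .

# New Item with a label, a description and two aliases in English.
# Assumption: schema:description and skos:altLabel are accepted on the input
# just like schema:name.
<urn:MyNewItem> a wikibase:Item, loader:New ;
    schema:name "My new Item to be created"@en ;
    schema:description "Example Item illustrating labels, descriptions and aliases"@en ;
    skos:altLabel "My new Item"@en, "New Item example"@en .
```

Because of the label and description constraint mentioned above, giving each Item a distinct description helps avoid collisions between Items that share a label.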

Working with Statements

The same logic can be used to work with Statements.

To update an existing Statement, its IRI needs to be used in the input data.

To create a new statement, the IRI can be arbitrary, as long as the Statement instance is tagged by the loader:New class.

In the following data snippet, we want to make sure that in our Wikibase instance (not Wikidata, note the prefixes), the item Q2193 has the English label Test item, and that the existing statement using property P17 points to property P16.

@prefix schema: <http://schema.org/> .

@prefix wd: <https://wikibase.opendata.cz/entity/> .

@prefix p: <https://wikibase.opendata.cz/prop/> .

@prefix ps: <https://wikibase.opendata.cz/prop/statement/> .

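@prefix loader: <http://plugins.linkedpipes.com/ontology/l-wikibase#> .

@prefix wds: <https://wikibase.opendata.cz/entity/statement/> .

@prefix wikibase: <http://wikiba.se/ontology#> .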

wd:Q2193 a wikibase:Item ;

schema:name "Test item"@en ;

p:P17 wds:Q2193-4ceab9fd-45b0-c7db-176f-15e4b0b6cb03 .

wds:Q2193-4ceab9fd-45b0-c7db-176f-15e4b0b6cb03 a wikibase:Statement;

ps:P17 wd:P16 .

The following snippet shows the creation of a new statement using property P16 pointing to https://google.com.

@prefix schema: <http://schema.org/> .

@prefix wd: <https://wikibase.opendata.cz/entity/> .

@prefix p: <https://wikibase.opendata.cz/prop/> .

@prefix ps: <https://wikibase.opendata.cz/prop/statement/> .

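@prefix loader: <http://plugins.linkedpipes.com/ontology/l-wikibase#> .

@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .

@prefix wikibase: <http://wikiba.se/ontology#> .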

wd:Q2192 a wikibase:Item ;

rdfs:label "URL property test item"@en ;

p:P16 <urn:1234> .

<urn:1234> a loader:New ;

ps:P16 <https://google.com> .

Working with complex data types and qualifiers

Wherever one needs to use a complex data type such as wikibase:GlobecoordinateValue, wikibase:TimeValue or wikibase:QuantityValue, only the full representation of that value is supported.

Note that values, references and qualifiers do not have their own identity and their IRI is based on their hash value.

Therefore, it does not matter which IRIs are used for those.
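
Of the complex data types listed above, wikibase:TimeValue is the only one not shown in an example in this section. Here is a minimal sketch of its full representation, using the value properties defined by the Wikibase RDF Dump Format. Q100 and P100 are hypothetical identifiers, P100 is assumed to be a property with the time data type, and typing the new statement as both wikibase:Statement and loader:New is an assumption based on the description of new Statements above.

```turtle
@prefix wd: <https://wikibase.opendata.cz/entity/> .
@prefix p: <https://wikibase.opendata.cz/prop/> .
@prefix psv: <https://wikibase.opendata.cz/prop/statement/value/> .
@prefix wikibase: <http://wikiba.se/ontology#> .
@prefix loader: <http://plugins.linkedpipes.com/ontology/l-wikibase#> .
@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .

# Q100 and P100 are hypothetical and serve only as an illustration.
wd:Q100 a wikibase:Item ;
    p:P100 <urn:newTimeStatement> .

# New statement, so its IRI is arbitrary and it is tagged with loader:New.
<urn:newTimeStatement> a wikibase:Statement, loader:New ;
    psv:P100 <urn:timeValue> .

# Full representation of the time value; the IRI of the value node is arbitrary.
<urn:timeValue> a wikibase:TimeValue ;
    wikibase:timeValue "2020-01-15T00:00:00Z"^^xsd:dateTime ;
    wikibase:timePrecision "11"^^xsd:integer ;   # 11 stands for day precision
    wikibase:timeTimezone "0"^^xsd:integer ;
    wikibase:timeCalendarModel <http://www.wikidata.org/entity/Q1985727> .   # proleptic Gregorian calendar
```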

The following snippet shows an update of a statement that uses P12 with a wikibase:GlobecoordinateValue and a qualifier that uses P8 with a wikibase:QuantityValue.

@prefix schema: <http://schema.org/> .

@prefix wd: <https://wikibase.opendata.cz/entity/> .

@prefix p: <https://wikibase.opendata.cz/prop/> .

@prefix ps: <https://wikibase.opendata.cz/prop/statement/> .

@prefix psv: <https://wikibase.opendata.cz/prop/statement/value/> .

@prefix pqv: <https://wikibase.opendata.cz/prop/qualifier/value/> .

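@prefix loader: <http://plugins.linkedpipes.com/ontology/l-wikibase#> .

@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .

@prefix wds: <https://wikibase.opendata.cz/entity/statement/> .

@prefix wikibase: <http://wikiba.se/ontology#> .

@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .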

wd:Q2181 a wikibase:Item ;

rdfs:label "qualifierTest item"@en ;

p:P12 wds:Q2181-4B493D40-A742-4791-8EAE-60E6D0B73501 .

wds:Q2181-4B493D40-A742-4791-8EAE-60E6D0B73501 a wikibase:Statement ;

psv:P12 <urn:value1> ;

pqv:P8 <urn:valueQ> .

<urn:value1> a wikibase:GlobecoordinateValue ;

wikibase:geoLatitude "28"^^xsd:double ;

wikibase:geoLongitude "20"^^xsd:double ;

wikibase:geoPrecision "0.000277778"^^xsd:double ;

wikibase:geoGlobe <http://www.wikidata.org/entity/Q2> .

<urn:valueQ> a wikibase:QuantityValue ;

wikibase:quantityAmount "+4"^^xsd:decimal ;

wikibase:quantityUpperBound "5"^^xsd:decimal ;

wikibase:quantityLowerBound "2"^^xsd:decimal ;

wikibase:quantityUnit <https://wikibase.opendata.cz/entity/Q2153> .

Deleting statements

It is also possible to delete existing statements, as in the snippet below:

@prefix schema: <http://schema.org/> .

@prefix wd: <https://wikibase.opendata.cz/entity/> .

@prefix p: <https://wikibase.opendata.cz/prop/> .

@prefix ps: <https://wikibase.opendata.cz/prop/statement/> .

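@prefix loader: <http://plugins.linkedpipes.com/ontology/l-wikibase#> .

@prefix wds: <https://wikibase.opendata.cz/entity/statement/> .

@prefix wikibase: <http://wikiba.se/ontology#> .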

wd:Q2212 a wikibase:Item ;

p:P19 wds:Q2212-dc589db5-481a-e6e5-f742-2032c2de7fbd .

wds:Q2212-dc589db5-481a-e6e5-f742-2032c2de7fbd a wikibase:Statement, lpetl:Delete .

Merging and replacing references

The default strategy for existing statements is to merge existing references with the references specified in the input data. Alternatively, references of the existing statement can be replaced by what is specified in the data, like in the snippet below:

@prefix schema: <http://schema.org/> .

@prefix wd: <https://wikibase.opendata.cz/entity/> .

@prefix p: <https://wikibase.opendata.cz/prop/> .

@prefix ps: <https://wikibase.opendata.cz/prop/statement/> .

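@prefix loader: <http://plugins.linkedpipes.com/ontology/l-wikibase#> .

@prefix wds: <https://wikibase.opendata.cz/entity/statement/> .

@prefix pr: <https://wikibase.opendata.cz/prop/reference/> .

@prefix wikibase: <http://wikiba.se/ontology#> .

@prefix prov: <http://www.w3.org/ns/prov#> .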

wd:Q2212 a wikibase:Item ;

p:P19 wds:Q2212-dc589db5-481a-e6e5-f742-2032c2de7fbd .

wds:Q2212-dc589db5-481a-e6e5-f742-2032c2de7fbd a wikibase:Statement, lpetl:Replace ;

ps:P19 wd:Q2211 ;

prov:wasDerivedFrom <urn:ref2> .

<urn:ref2> a wikibase:Reference ;

pr:P16 <https://data.gov.cz> .
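
For contrast, here is a minimal sketch of the default merge strategy. It describes the same statement without the replace tag, so the reference given here would be expected to be added to the references the statement already has. The reference IRI <urn:ref3> and the https://example.org/source URL are illustrative, and the wds: and pr: namespaces are assumed to follow the usual Wikibase pattern for this instance.

```turtle
@prefix wd: <https://wikibase.opendata.cz/entity/> .
@prefix wds: <https://wikibase.opendata.cz/entity/statement/> .
@prefix p: <https://wikibase.opendata.cz/prop/> .
@prefix ps: <https://wikibase.opendata.cz/prop/statement/> .
@prefix pr: <https://wikibase.opendata.cz/prop/reference/> .
@prefix wikibase: <http://wikiba.se/ontology#> .
@prefix prov: <http://www.w3.org/ns/prov#> .

wd:Q2212 a wikibase:Item ;
    p:P19 wds:Q2212-dc589db5-481a-e6e5-f742-2032c2de7fbd .

# The existing statement carries no replace tag, so the default (merge) strategy applies.
wds:Q2212-dc589db5-481a-e6e5-f742-2032c2de7fbd a wikibase:Statement ;
    ps:P19 wd:Q2211 ;
    prov:wasDerivedFrom <urn:ref3> .

# Illustrative reference; like values, references have no identity of their own,
# so the IRI used here is arbitrary.
<urn:ref3> a wikibase:Reference ;
    pr:P16 <https://example.org/source> .
```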