How to test data

You want to test that data processed in a pipeline meets specific requirements.

Problem

Many pipelines embody assumptions about the data they operate on. In order for such pipelines to execute successfully, the data they work with must fulfil the assumptions. Unfortunately, these assumptions are often not stated explicitly, so that the pipelines tend to fail unexpectedly when they encounter data that violates their assumptions. There are many cases in which this problem appears: when pipelines receive arbitrary input from their users, the data they work with changes over time, or when edits during a pipeline development have undesired side effects.

Solution

LinkedPipes ETL (LP-ETL) enables to make the assumptions behind a pipeline explicit and automatically testable. The SPARQL ask component allows you to stop a pipeline's execution based on the result of a given SPARQL ASK query. A pipeline's assumptions can be formulated as ASK queries and tested automatically via the SPARQL ask component.

The component falls into a special category of components that test data quality. Unlike extractors, transformers, and loaders, it takes input data and, instead of producing output data, it effects the execution of the pipeline that contains it. Such pipeline will either terminate or continue when the component is run. The component's option Fail on ASK success determines what effect the component's query result has. Execution of the component's pipeline is terminated either when its query returns the boolean value true and the mentioned option is switched on, or when the query result is false and the option is switched off. Otherwise, the pipeline execution continues. Being able to configure how is the query result interpreted allows you write simpler ASK queries. For instance, consider writing a query that tests if some data matches a required graph pattern and an inverse query testing that no data matches a prohibited pattern. For example, to test if some data is not empty, you can use the this simple query:

ASK { [] ?p [] . }When a pipeline execution fails, you can go to its debug view and see if it was terminated by the SPARQL ask component. In such case, the component will be outlined in red and it would display the error Ask assertion failure upon clicking on it.

There are several typical scenarios in which this component is used. When you develop a pipeline fragment that requires its users to provide it with input data, the component can test if the provided data meets the requirements of the fragment. For example, besides testing a non-empty input, a pipeline fragment may require its input to be described in terms of a specific RDF vocabulary. In such case, you can describe the required data shape by a graph pattern and let the SPARQL ask component check whether the input data matches the pattern.

There are more cases in which a pipeline's input may vary. For instance, a pipeline can be run periodically, while the data it processes changes over time. If you want to ensure that the assumptions about the input with which a pipeline operates are met, you can employ the SPARQL ask component to test the pipeline's intermediate data. Likewise, the tests executed by this component can also serve as guard rails during pipeline development, helping you to detect unwanted effects of changes you make in the pipeline.

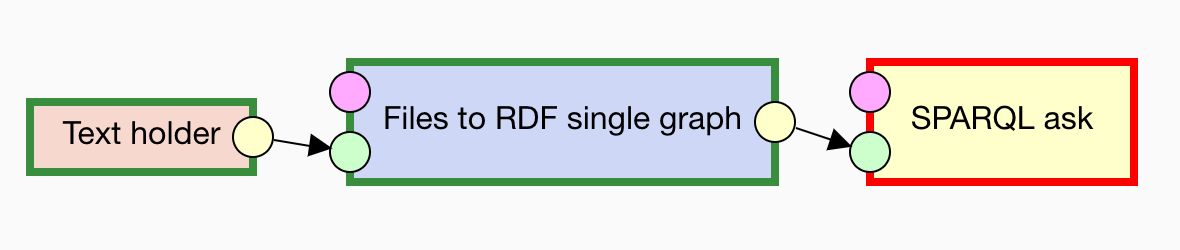

Here you can find a simple pipeline that tests if its input is not empty.

Discussion

In order to ensure that LP-ETL does not execute a component unless a condition tested by the SPARQL ask component is met, you can connect the SPARQL ask to the component via a Run before edge. This edge instructs LP-ETL to run the connected components only after the SPARQL ask component has run. In effect, either the tested condition is satisfied and the connected component is run, or else the pipeline's execution is terminated. For example, this approach is useful for preventing needless execution of components performing expensive or long-running operations.

If you want to test more sophisticated requirements, then knowing when data fails to meet them is insufficient, because you need more information to diagnose the problem and solve it. In such case you can formulate a SPARQL CONSTRUCT query that tests the given requirement but also collects and outputs data describing the violations of the requirement. For example, if you require that birth dates of all people precede their death dates in your dataset, you can express such requirement via the following query:

PREFIX dbo: <http://dbpedia.org/ontology/>

PREFIX foaf: <http://xmlns.com/foaf/0.1/>

PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>

PREFIX spin: <http://spinrdf.org/spin#>

CONSTRUCT {

[] a spin:ConstrainViolation ;

rdfs:label "Person's birth date follows the death date"@en ;

spin:violationRoot ?person ;

spin:violationPath dbo:birthDate, dbo:deathDate ;

spin:violationValue ?birthDate, ?deathDate .

}

WHERE {

?person a foaf:Person ;

dbo:birthDate ?birthDate ;

dbo:deathDate ?deathDate .

FILTER (?birthDate > ?deathDate)

}

In this query, violations of the tested requirement are described with the terms of the SPIN RDF vocabulary. Their descriptions feature labels indicating the violated requirement, as well as links to resources involved in the violation, and values causing it. For example, if you run this query on DBpedia, you will see that it currently fails this assumption.

In LP-ETL, you can execute CONSTRUCT queries by the SPARQL construct component. You can pipe the results of this component into the SPARQL ask component and assert that they are empty by switching on the Fail on ASK success option and filling in the already mentioned query:

ASK { [] ?p [] . }If the described requirement is not satisfied, then the pipeline's execution terminates. If that happens, you can go to the debug view of the failed execution and see the output of the CONSTRUCT query to find out what caused the failure.

See also

While SPARQL offers expressive means to formulate a variety of requirements for data, there are other tools made directly for the job of testing data. The standard way of testing whether data conforms to the assumed structure is the Shapes Constraint Language, which provides greater capabilities for RDF data validation than vanilla SPARQL.

If you are after conformance with RDF vocabularies describing the processed data, then you can try RDFUnit, which allows to generate integrity constraints automatically from RDF vocabularies.