ETL: Extract Transform Load for Linked Data

What's new


2022-09-04: LinkedPipes ETL to be used in the Slovak National Open Data Catalog

LinkedPipes ETL will be used as a core technology in the updated version of the Slovak National Open Data Catalog, following its existing use in the Czech National Open Data Catalog.


2020-10-30: LinkedPipes ETL to be used in EU CEF Telecom project STIRData

LinkedPipes ETL will be used as a core technology in the recently started EU CEF Telecom project STIRData, which promotes open data interoperability through Linked Data and standardization!


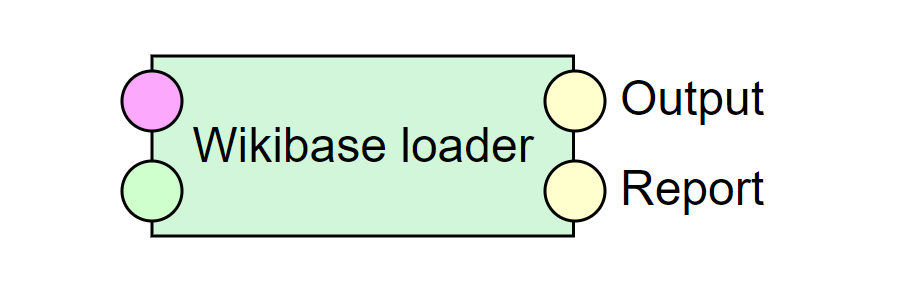

2019-11-15: New tutorial about loading to Wikibase available!


2019-07-12: LinkedPipes ETL featured @ Wikimania 2019!

LinkedPipes ETL will be featured as a solution for repeatable loading of data into Wikibases and Wikidata at Wikimania 2019! See you in August in Stockholm, Sweden!


2018-07-04: LinkedPipes ETL featured @ ISWC 2018 Demo Session!

LinkedPipes ETL will be featured as part of the LinkedPipes DCAT-AP Viewer demo at ISWC 2018! See you in October in Monterey, California, USA!

3-minute screencast

Features


Modular design

Deploy only the components that you actually need. For example, your pipeline development machine needs the whole stack, but your data processing server needs only the backend. Data processing is extended through components: we provide a library of the most basic ones, and when you need something special, you can copy and modify an existing one.


All functionality covered by REST APIs

Our frontend uses the same APIs that are available to everyone. This means that you can build your own frontend, integrate only parts of our app, and control everything easily.
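As a rough illustration of driving the backend from your own code rather than from the frontend, the sketch below prepares (but does not send) an HTTP request that asks for a pipeline to be executed. The endpoint path `/resources/executions`, the JSON body with an `iri` key, the host, and the pipeline IRI are all assumptions made for this example; consult the API documentation of your deployment for the actual contract.

```python
import json
import urllib.request


def build_execution_request(base_url: str, pipeline_iri: str) -> urllib.request.Request:
    """Prepare a POST request asking the backend to run one pipeline.

    ASSUMPTION: the path "/resources/executions" and the {"iri": ...} body
    are placeholders for illustration, not a documented contract.
    """
    payload = json.dumps({"iri": pipeline_iri}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/resources/executions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical host and pipeline IRI, used only to show the call shape.
request = build_execution_request(
    "http://localhost:8080/",
    "http://localhost:8080/resources/pipelines/example",
)
# Sending it would be: urllib.request.urlopen(request)
```

Building the request separately from sending it keeps the sketch side-effect free and makes the call easy to inspect or log before it reaches a real instance.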


Almost everything is RDF

Except for our configuration file, everything is in RDF. This includes the ETL pipelines, component configurations, and messages indicating the progress of the pipeline. You can generate the pipelines and configurations using SPARQL from your own app. Batch modification of configurations is also a simple text file operation, so there is no more clicking through every pipeline when migrating.
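Because pipelines and configurations are serialized RDF, a migration can be as simple as a search-and-replace over exported files. The sketch below rewrites one IRI prefix to another across a directory of exports. The `*.trig` file pattern, the directory layout, and the example IRIs are assumptions for illustration, and the function names are hypothetical helpers, not part of LinkedPipes ETL.

```python
from pathlib import Path


def rebase_iris(text: str, old_base: str, new_base: str) -> str:
    """Replace every occurrence of old_base with new_base in serialized RDF text."""
    return text.replace(old_base, new_base)


def rebase_directory(directory: str, old_base: str, new_base: str,
                     pattern: str = "*.trig") -> int:
    """Rewrite matching files in place and return how many files changed.

    ASSUMPTION: exported pipelines live as text files matching `pattern`.
    """
    changed = 0
    for path in sorted(Path(directory).glob(pattern)):
        original = path.read_text(encoding="utf-8")
        updated = rebase_iris(original, old_base, new_base)
        if updated != original:
            path.write_text(updated, encoding="utf-8")
            changed += 1
    return changed


# Hypothetical IRIs: move a configuration from a development host to a server.
sample = '<http://dev.example.org/resources/pipelines/1> a <http://example.org/Pipeline> .'
migrated = rebase_iris(sample, "http://dev.example.org/", "https://etl.example.org/")
```

A plain text replace is deliberately naive: it also rewrites matching strings inside literals, so back up the exports and review the changes before importing them.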

Commercial support

Commercial support is available through CUIT s.r.o., a spin-off company of Charles University.