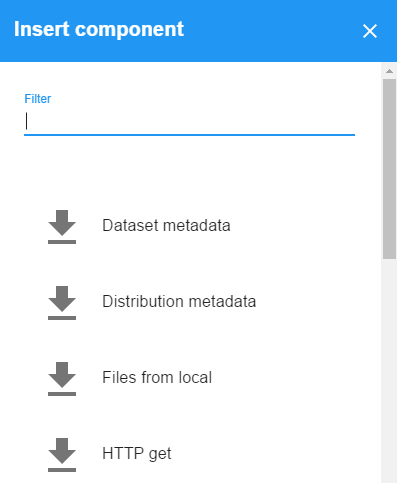

Components

A key building block of a pipeline is a component. There is a library of components ready and here we go through their configuration. Of course, everyone can add their own component, the best way to do that is to copy&paste one of ours and change it. There are five basic types of components. Extractors bring data from outside to the ETL pipeline, e.g. by downloading a file from the Web. Transformers perform transformation of data already in the pipeline and the result is kept in the pipeline. Quallity Assessment components verify that data in the pipeline meet defined criteria. Loaders take data produced by the pipeline and place it outside, e.g. upload it to a web server or database. Special purpose components may do something else, e.g. run an external process.

Component types

The icons next to the components in the component list represent their type. Currently, we have five types of components.

-

Extractors

Bring data from the outside into the pipeline

-

Transformers

Take data from the pipeline, change it and pass it on

-

Quality Assessment

Check data passing through the pipeline and stop execution in case of problems

-

Loaders

Take data from the pipeline and load it to an external place

-

Special

They do something else, e.g. execute a remote command

List of available components

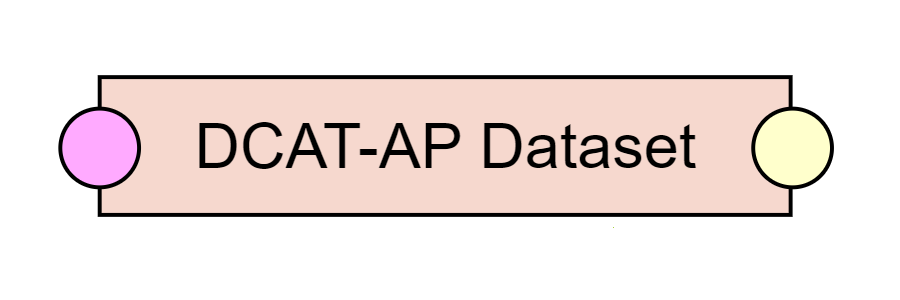

Extractor, provides a form to fill in DCAT-AP v1.1 Dataset metadata.

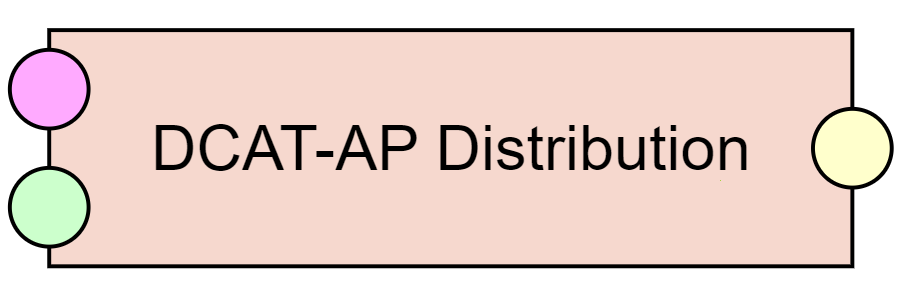

Extractor, provides a form to fill in DCAT-AP v1.1 Distribution metadata.

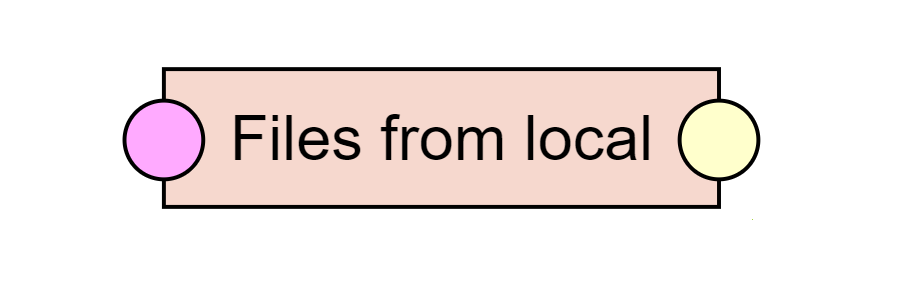

Extractor, allows files in local file system to be used in the pipeline.

- Path to a local file or directory

- Copies a file or directory on a local file system to be accessible by the pipeline

Extractor, allows the user to download a file from the web using FTP.

- Use passive mode

- Determines whether the component should use the passive mode

Extractor, allows the user to download a file from the web.

- File URL

- URL from which the file should be downloaded

- File name

- File name under which the file will be visible in the pipeline

- Force to follow redirects

- The component will follow HTTP 3XX redirects

- User agent

- The user agent string that should be present in the HTTP get headers. Defaults to the default Java user agent string.

Extractor, allows the user to download a list of files from the web.

- Number of threads used for download

- This component supports parallel download using multiple threads. This property sets the thread pool size.

- Force to follow redirects

- The component will follow HTTP 3XX redirects

- Skip on errors

- When enabled and one of the files in the list fails to download, the component skips it and downloads the rest of the list instead of failing

Extractor, allows the user to pass files from an HTTP POST pipeline execution request.

Extractor, allows the user to extract RDF triples from a SPARQL endpoint using a CONSTRUCT query.

- Endpoint

- URL of the SPARQL endpoint to be queried

- MIME type

- MIME type to be used in the Accept header of the HTTP request made by RDF4J to the remote endpoint

- SPARQL CONSTRUCT query

- Query for extraction of triples from the endpoint

Extracts RDF triples from a SPARQL endpoint based on a list of entities to extract. Suitable for bigger data.

Extractor, allows the user to extract RDF triples from a SPARQL endpoint using a series of CONSTRUCT queries based on an input list of entity IRIs. It is suitable for bigger data, as it queries the endpoint for descriptions of a limited number of entities at once, creating a separete RDF data chunk from each query.

- Endpoint URL

- URL of the SPARQL endpoint to be queried

- MimeType

- Some SPARQL endpoints (e.g. DBpedia) return invalid RDF data with the default MIME type and it is necessary to specify another one here. This is sent in the SPARQL query HTTP request

Acceptheader. - Chunk size

- Number of entities to be queried for at once. This is done by using the VALUES clause in the SPARQL query, so this number corresponds to the number of items in the VALUES clause.

- Default graph

- IRIs of the graphs to be passed as default graphs for the SPARQL query

- SPARQL CONSTRUCT query with ${VALUES} placeholder

- Query for extraction of triples from the endpoint. At the end of its WHERE clause, it should have the

${VALUES}placeholder. This is where the VALUES clause listing the current entities to query is inserted.

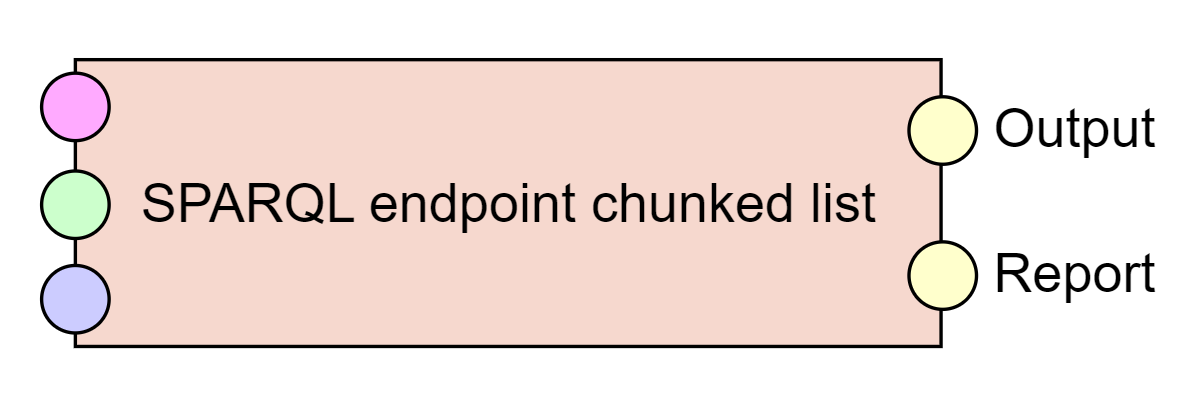

Extracts RDF triples from SPARQL endpoints based on a list of endpoints and queries to execute, and a list of entities to query for.

Extractor, allows the user to extract RDF triples from a SPARQL endpoint using a series of CONSTRUCT queries. It is especially suitable for querying multiple SPARQL endpoints. It is also suitable for bigger data, as it queries the endpoints for descriptions of a limited number of entities at once, creating a separete RDF data chunk from each query.

- Number of threads to use

- Number of threads to be used for querying in total.

- Query time limit in seconds (-1 for no limit)

- Some SPARQL endpoints may hang on a query for a long time. Sometimes it is therefore desirable to limit the time waiting for an answer so that the whole pipeline execution is not stuck.

- Encode invalid IRIs

- Some SPARQL endpoints such as DBpedia contain invalid IRIs which are sent in results of SPARQL queries. Some libraries like RDF4J then can crash on those IRIs. If this is the case, choose this option to encode such invalid IRIs.

- Fix missing language tags on

rdf:langStringliterals - Some SPARQL endpoints such as DBpedia contain

rdf:langStringliterals without language tags, which is invalid RDF 1.1. Some libraries like RDF4J then can crash on those literals. If this is the case, choose this option to fix this problem by replacingrdf:langStringwithxsd:stringdatatype on such literals.

Extracts RDF triples from a SPARQL endpoint according to a SPARQL CONSTRUCT query, using the scrollable cursor technique for OpenLink Virtuoso.

Extractor, allows the user to extract RDF triples from a SPARQL endpoint using a CONSTRUCT query, using the scrollable cursor technique for OpenLink Virtuoso.

- Endpoint URL

- URL of the SPARQL endpoint to be queried

- Page size

- Specifies the size of one page to be returned

- Default graph

- IRIs of default graphs used for the SPARQL query

- Query prefixes

- Prefixes to be defined for the scrollable cursor query

- Outer construct clause

- The CONSTRUCT clause of the outer paging query. It should use a subset of the variables returned by the SELECT clause of the inner query

- Inner select query

- Ordered SELECT query to be used as a nested query in the outer paging query

Extracts RDF triples from SPARQL endpoints based on a list of endpoints and queries to execute.

Extractor, allows the user to extract RDF triples from a SPARQL endpoint using a series of CONSTRUCT queries. It is especially suitable for querying multiple SPARQL endpoints.

- Number of threads to use

- Number of threads to be used for querying in total.

- Query time limit in seconds (-1 for no limit)

- Some SPARQL endpoints may hang on a query for a long time. Sometimes it is therefore desirable to limit the time waiting for an answer so that the whole pipeline execution is not stuck.

- Encode invalid IRIs

- Some SPARQL endpoints such as DBpedia contain invalid IRIs which are sent in results of SPARQL queries. Some libraries like RDF4J then can crash on those IRIs. If this is the case, choose this option to encode such invalid IRIs.

- Fix missing language tags on

rdf:langStringliterals - Some SPARQL endpoints such as DBpedia contain

rdf:langStringliterals without language tags, which is invalid RDF 1.1. Some libraries like RDF4J then can crash on those literals. If this is the case, choose this option to fix this problem by replacingrdf:langStringwithxsd:stringdatatype on such literals. - Limit number of tasks running in parallel in a group (0 for no limit)

- To avoid overloading a single SPARQL endpoint, set a maximum number of tasks running in parallel for a group. Then, place all tasks targeting a single endpoint to a single group. Tasks in different groups will still run in parallel in the number of threads specified.

- Query commit size (0 to commit all triples at once)

- This is used to control the number of triples committed to a repository at once. Limiting the number may be necessary for large query results.

Extracts a CSV table from a SPARQL endpoint according to a SPARQL SELECT query

Extractor, allows the user to extract a CSV table from a SPARQL endpoint using a SELECT query.

- Endpoint URL

- URL of the SPARQL endpoint to be queried

- File name

- File name of the resulting CSV table

- Default graph

- IRIs of default graphs used for the SPARQL query

- SPARQL SELECT query

- Query for extraction of CSV from the endpoint

Extracts a CSV table from a SPARQL endpoint according to a SPARQL SELECT query, using the scrollable cursor technique for OpenLink Virtuoso.

Extractor, allows the user to extract a CSV table from a SPARQL endpoint using a SELECT query, using the scrollable cursor technique for OpenLink Virtuoso.

- Endpoint URL

- URL of the SPARQL endpoint to be queried

- File name

- File name of the resulting CSV table

- Page size

- Specifies the size of one page to be returned

- Default graph

- IRIs of default graphs used for the SPARQL query

- Query prefixes

- Prefixes to be defined for the scrollable cursor query

- Outer select clause

- The SELECT clause of the outer paging query. It should be a subset of the SELECT clause of the inner query

- Inner select query

- Ordered SELECT query to be used as a nested query in the outer paging query

Extractor, allows the user to input any text as a file into the pipeline.

- Output file name

- File name under which the file will be visible in the pipeline

- File content

- Text content of the file

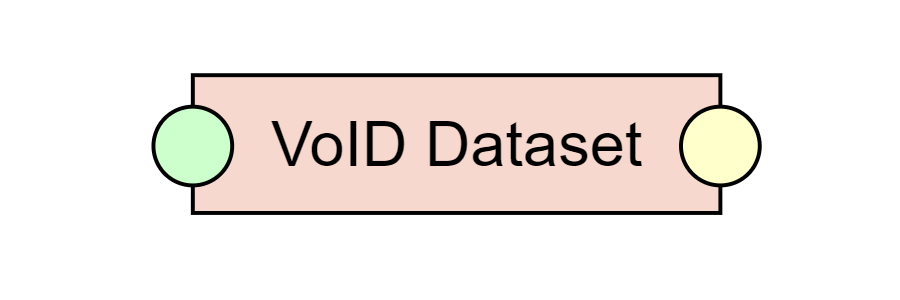

Extractor, provides a form to fill in VoID Dataset metadata properties.

- Get distribution IRI from input

- Gets distribution IRI from input, output of DCAT-AP Distribution is expected.

- Distribution IRI

- When used independently, distribution IRI can be set here.

- Example resource URLs

- URLs of example resources.

- SPARQL Endpoint URL

- URL of a SPARQL endpoint through which the data is accessible.

- Copy download URLs to

void:dataDump - In DCAT, the link to download a file is

dcat:downloadURL. This copies it also tovoid:dataDumpfor VoID enabled applications.

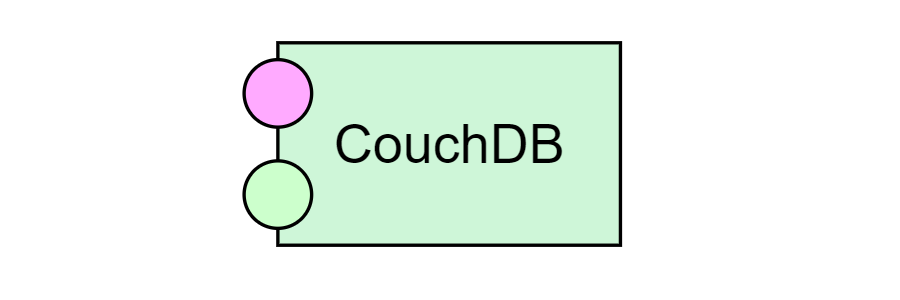

Loader, allows the user to to load documents into Apache CouchDB.

- CouchDB server URL

- URL of the CouchDB server, e.g.

http://127.0.0.1:5984 - Database name

- Name of the CouchDB database to write to

- Clear database (delete and create)

- Determines whether the CouchDB database is first deleted an recreated before loading

- Maximum batch size in MB

- The loader can split the load into batches of the specified size

- Use authentication

- Use for CouchDB requiring user authentication

- User name

- User name for CouchDB

- Password

- Password for CouchDB

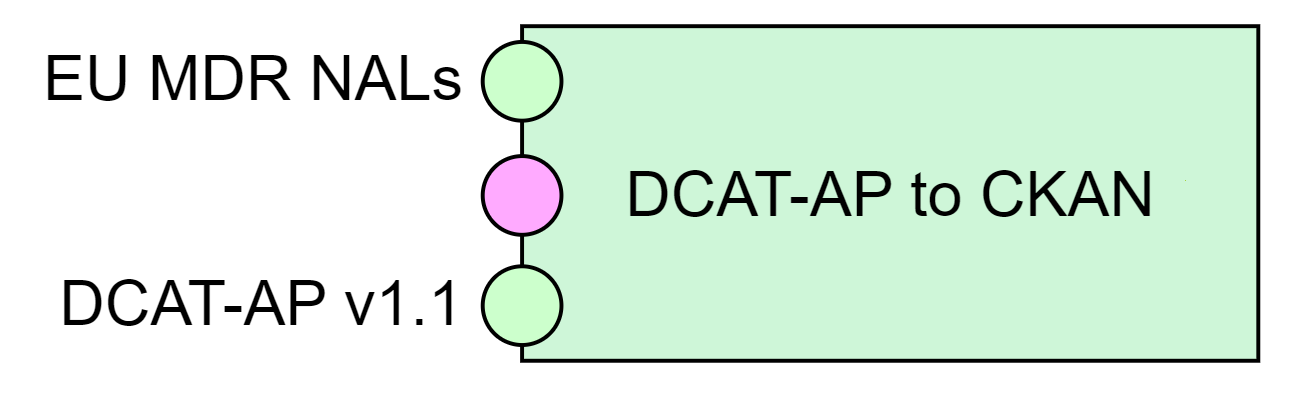

Loader, loads DCAT-AP v1.1 metadata to a CKAN catalog.

- CKAN Action API URL

- URL of the CKAN API of the target catalog, e.g.

https://linked.opendata.cz/api/3/action - CKAN API Key

- The API key allowing write access to the CKAN catalog. This can be found on the user detail page in CKAN.

- CKAN dataset ID

- The CKAN dataset ID to be used for the current dataset

- Load language RDF language tag

- Since DCAT-AP supports multilinguality and CKAN does not, this specifies the RDF language tag of the language, in which the metadata should be loaded.

- Profile

- CKAN can be extended with additional metadata fields for datasets (packages) and distributions (resources). If the target CKAN is not extended, choose

Pure CKAN. - Generate CKAN resources from VoID example resources

- If the input metadata contains

void:exampleResource, create a CKAN resource from it. - Generate CKAN resource from VoID SPARQL endpoint

- If the input metadata contains

void:sparqlEndpoint, create a CKAN resource from it.

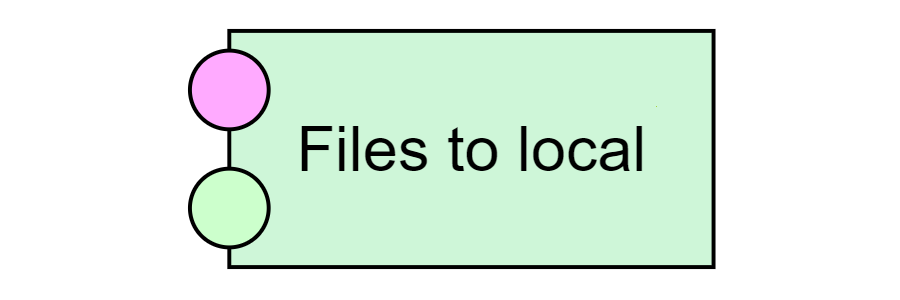

Loader, allows files from the pipeline to be stored on a local file system.

- Target path

- Copies a file or directory to a local file system from the pipeline

- Permissions to set to files

- Linux file system permissions in text form to be set to output files, e.g.

rwxrwxr-- - Permissions to set to directories

- Linux file system permissions in text form to be set to output directoriess, e.g.

rwxrwxr-x

Loader, allows the user to transfer a file to a server via the SCP protocol.

- Host address

- Address of the target server for the file upload. E.g.

obeu.vse.cz - Port number

- SSH port to use. Typically

22 - User name

- User name for the server

- Password

- Password for the server. Note that this is plain text and not secure in any way.

- Target directory

- Directory on the server where the file should be uploaded. E.g.

~/upload/ - Create the target directory

- When checked, tries to create the target directory, when it is not present on the server. Fails otherwise.

- Clear target directory

- When checked, empties the target directory before loading input files.

Loader, allows the user to transfer a file to a server via the FTP protocol.

- Host address

- Address of the target server for the file upload. E.g.

myftp.com - Port number

- FTP port to use. Typically

21. - User name

- User name for the server

- Password

- Password for the server. Note that this is plain text and not secure in any way.

- Target directory

- Directory on the server where the file should be uploaded. E.g.

~/upload/ - Retry count

- Number of retries in cases of connection failures.

Allows the user to store RDF triples in a SPARQL endpoint using the SPARQL Graph Store HTTP Protocol.

Loader, allows the user to store RDF triples in a SPARQL endpoint using the SPARQL 1.1 Graph Store HTTP Protocol.

- Repository

- Selection of the target repository type. This is due to different behavior among implementations

- Graph Store protocol endpoint

- URL of the graph store protocol endpoint

- Target graph IRI

- IRI of the RDF graph to which the input data will be loaded

- Clear target graph before loading

- When checked, replaces the target graph by the loaded data

- Log graph size

- When checked, the target graph size before and after the load is logged

- User name

- User name to be used to log in to the SPARQL endpoint

- Password

- Password to be used to log in to the SPARQL endpoint

Loads DCAT-AP v1.1 metadata to the datahub.io CKAN, from which the LOD cloud diagram is generated.

Loader, loads DCAT-AP v1.1 metadata to the datahub.io CKAN, from which the LOD cloud diagram is generated. Helps you with all the additional requried metadata.

- Datahub.io API Key

- The API key allowing write access to the datahub.io CKAN catalog. This can be found on the user detail page.

- CKAN dataset ID

- The CKAN dataset ID to be used for the current dataset

- CKAN owner organization ID

- ID of your datahub.io CKAN organization, e.g.

9046f134-ea81-462f-aae3-69854d34fc96 - CKAN license ID

- ID of the CKAN license, which is different from the license URL specified in DCAT-AP metadata

- Additional metadata

- Follows the additional requried metadata document

Loader, allows the user to to load documents into Apache Solr.

- URL of Solr instance

- URL of the Solr server, e.g.

http://localhost:8983/solr - Name of Solr Core

- Name of the Solr Core to write to

- Clear target core

- Determines whether the Solr core is cleared before loading

- Use authentication

- Use for Solr requiring user authentication

- User name

- User name for Solr

- Password

- Password for Solr

Allows the user to store RDF triples in a SPARQL endpoint using SPARQL Update.

Loader, allows the user to store RDF triples in a SPARQL endpoint using SPARQL 1.1 Update.

- SPARQL Update endpoint

- URL of the SPARQL endpoint accepting SPARQL 1.1 Update queries

- Target graph IRI

- IRI of the RDF graph to which the input data will be loaded

- Clear target graph before loading

- When checked, executes SPARQL CLEAR GRAPH on the target graph before loading

- Commit size

- Number of triples sent to triple store at once using the RDF4J RepositoryConnection

- User name

- User name to be used to log in to the SPARQL endpoint

- Password

- Password to be used to log in to the SPARQL endpoint

Allows the user to store chunked RDF data in a SPARQL endpoint using SPARQL Update.

Loader, allows the user to store chunked RDF data in a SPARQL endpoint using SPARQL 1.1 Update.

- SPARQL Update endpoint

- URL of the SPARQL endpoint accepting SPARQL 1.1 Update queries

- Target graph IRI

- IRI of the RDF graph to which the input data will be loaded

- Clear target graph before loading

- When checked, executes SPARQL CLEAR GRAPH on the target graph before loading

- User name

- User name to be used to log in to the SPARQL endpoint

- Password

- Password to be used to log in to the SPARQL endpoint

Loader, allows the user to load RDF data using the Wikibase RDF Dump Format to a Wikibase instance. There is a whole tutorial on Loading data to Wikibase available.

- Wikibase API Endpoint URL (api.php)

-

This is the URL of the

api.phpin the target Wikibase instance. For examplehttps://www.wikidata.org/w/api.php - Wikibase Query Service SPARQL Endpoint URL

-

This is the URL of the SPARQL Endpoint of the Wikibase Query Service containing data from the target Wikibase instance.

For example

https://query.wikidata.org/sparql - Any existing property from the target Wikibase instance

- e.g. P1921 for Wikidata. This is necessary, so that the used library is able to determine data types of properties used in the Wikibase.

- Wikibase ontology IRI prefix

-

This is the common part of the IRI prefixes used by the target Wikibase instance.

For example

http://www.wikidata.org/. This can be determined by looking at an RDF representation of the Q1 entity in Wikidata, specifically thewd:and similar prefixes. - User name

- User name for the Wikibase instance.

- Password

- Password for the Wikibase instance.

- Average time per edit in ms

- This is used by the wrapped Wikidata Toolkit to pace the API calls.

- Use strict matching (string-based) for value matching

-

Wikibase distinguishes among various string representations of the same number, e.g.

1and01, whereas in RDF, all those representations are considered equivalent and interchangable. When enabled, the textual representations are considered different, which may lead to unnecessary duplicates. - Skip on error

- When a Wikibase API call fails and this is enabled, the component continues its execution. Errors are logged in the Report output.

- Retry count

-

Number of retries in case of

IOException, i.e. not for all errors. - Retry pause

- Time between individual requests in case of failure.

- Create item message

- MediaWiki edit summary message when creating items.

- Update item message

- MediaWiki edit summary message when updating items.

Checks data with a SPARQL ask query and stops pipeline execution on success or fail.

Quality assurance component, Checks data with a ASK query and stops pipeline execution on success or fail.

- Fail on ASK success

- When checked, the component will stop pipeline execution, when the ASK query returns

true, when unchecked, the component will stop pipeline execution, when the ASK query returnsfalse - SPARQL ASK query

- Query for the data check

Transformer, allows the user to automatically translate literals using the Bing machine translation.

- Subscription key

- This is the subscription key needed to access the Bing translator API

- Default source language

- The component takes input language from the input literal language tags. The default source language will be used for literals without language tags.

- Target languages

- Languages to which will the input literals be translated

- Indicate machine translation in language tag

- When switched on, the machine translation will be indicated in the target literal language tag using BCP47 extension T like this:

"english string"@en-t-cs-t0-binginstead of simple"english string"@en

Transforms the RDF Chunked data unit into an RDF single graph data unit.

Transformer, allows the user to transform the RDF Chunked data unit into an RDF single graph data unit.

Transformer, allows the user to transform Microsoft Excel files to CSV files.

- Output file name template

- File name template under which the output CSV file will be visible in the pipeline, including extension. You can use

{FILE}and{SHEET}placeholders to generate multiple CSV files from one input file - Sheet filter

- Java regular expression matching Excel sheet names to process. If empty, all sheets are processed

- Row start

- Number of the first row to be transformed

- Row end

- Number of the last row to be transformed

- Column start

- Number of the first column to be transformed

- Column end

- Number of the last column to be transformed

- Virtual columns with header

- Adds

sheet_namecolumn header as this is currently the only supported virtual column - Determine type (date) for numeric cells

- Excel stores dates and integers as double values. When checked, the transformer tries to parse these as dates and integers

- Skip empty rows

- When checked, empty rows are not included in the output CSV. Otherwise, they are full of NULL values

- Add sheet name as column

- When checked, a

sheet_namecolumn is added, with source Excel sheet name as a value - Evaluate formulas

- When checked, formulas in Excel are evaluated

Transformer, which computes SHA-1 hash for each input file. The hash can be verified e.g. by the online calculator.

Transformer, decodes the input files using the base64 algorithm.

Transformer, allows the user to filter files by file name using Java regular expressions.

- File name filter pattern

- Regular expression to filter file names by

Transformer, allows the user to rename files by using Java regular expressions.

- Full file name RegExp to match

- Regular expression to match file names

- Replace RegExp with with

- Regular expression to replace the file names

Transformer, allows the user to convert RDF files (Files Data Unit) to RDF data (RDF data unit).

- Commit size

- Number of triples processed before commiting to database

- Format

- RDF serialization of the input file. Can be determined by file extension.

Converts RDF files to RDF data in a chunked fashion, to be further processed in smaller pieces.

Transformer, allows the user to convert RDF files (Files Data Unit) to RDF data in chunks, to be processed in smaller pieces.

- Format

- RDF serialization of the input file. Can be determined by file extension.

- Files per chunk

- Determines, how many input files should be stored in one output chunk

Transformer, allows the user to convert RDF files (Files Data Unit) to RDF data in single graph.

- Commit size

- Number of triples processed before commiting to database

- Format

- RDF serialization of the input file. Can be determined by file extension.

- Skip file on failure

- When a file cannot be loaded, skip it instead of failing

Transformer, inserts contents of input files to RDF triple objects.

- Predicate

- The RDF triple predicate used in the triples containing the file contents as objects. The subjects are RDF blank nodes

Transformer, provides geographical projection transformation features using the GeoTools library.

- Input resource type

- IRI of the type of entities containing the geocoordinates, e.g.

http://www.opengis.net/ont/gml#Point - Predicate for coordinates

- IRI of the predicate connecting the entity of the input resource type to the literal containing the geocoordinates, e.g. the

http://www.opengis.net/ont/gml#posproperty. The geocoordinates format is"X Y", i.e. twoxsd:doublenumbers separated by a space. - Predicate for coordinate reference system

- IRI of the predicate connecting the entity containing geocoordinates to a literal containing the source coordinates reference system, e.g.

http://www.opengis.net/ont/gml#srsName - Default coordinate reference system

- Coordinates reference system to be used with entities, which do not specify the coordinates reference system themselves, e.g.

EPSG:5514 - Predicate for linking to newly created points

- The new entity containing the transformed geocoordinates will be connected to the original entity using this predicate

- Output coordinate reference system

- Target coordinates type, e.g.

EPSG:4326 - Fail on error

- Stops execution on error when enabled, continues execution when disabled.

Transforms Data Unit containing multiple graphs to a Data Unit containing single graph.

Transformer. There are two types of RDF Data Units in LinkedPipes ETL right now. There is a data unit, that can contain multiple RDF named graphs, which is currently produced only by the Files to RDF, which may transform files containing RDF quads. When the user then wants to work with such data using components that only support triples, Graph merger is needed to do the conversion.

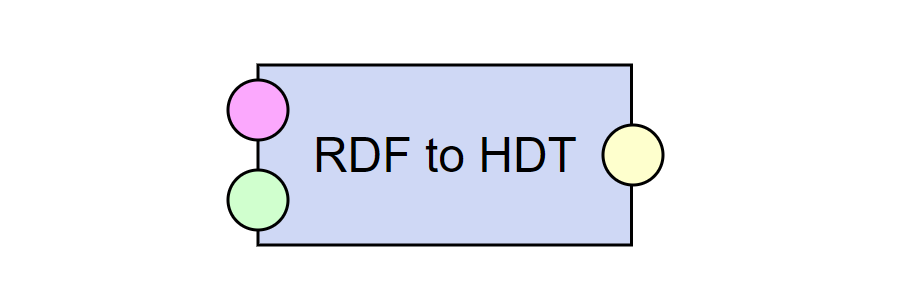

Transformer, allows the user to convert an HDT file (Files Data Unit) to RDF data (RDF data unit).

- Commit size

- Number of triples processed before commiting to database

Transformer, enables users to parse a HTML pages using jsoup CSS like selectors.

- Class of root subject

- IRI of the root output resource type

- Default has predicate

- Default predicate to be used to connect nested objects

- Generate source info

- Adds information about the source file into the output data

Transformer, performs JSON-LD operations on files formats using a JSON-LD processor.

- Operation

- Operation to be performed on input JSON-LD files

- JSON-LD context (where applicable)

- For

FlattenandCompactoperations, a new JSON-LD context can be specified

Transformer, adds a specified JSON-LD context and additional provenance data to input JSON files, making them JSON-LD.

- Encoding

- Encoding of the input files

- Data predicate

- IRI of the predicate connecting the root entity to the object representing the original JSON data interpreted as JSON-LD.

- Root entity type

- IRI of the type of the newly created root entity.

- Add file reference

- If checked, input file name is added to the output JSON-LD file using the File name predicate.

- File name predicate

- IRI of the predicate used to attach the input file name to the newly created root entity in the output JSON-LD file.

- Context object

- JSON object specifying the JSON-LD context.

Transformer, allows the user to add or subtract days from a date represented as xsd:date literal.

- Input predicate

- The predicate used in triples, where the object is the date to be shifted.

- Shift date by

- Number of days to shift the date by. Can be a positive and negative integer.

- Output predicate

- The predicate to be used in output triples to store the shifted date.

Transformer, generates text files using the {{ mustache }} template.

- Entity class IRI

- IRI of the RDF class of the objects, to which the {{ mustache }} template is applied.

- Add the

mustache:firstpredicate to first items of input lists - First items in input lists will have the

mustache:firstpredicate added. This is useful for instance for generating JSON arrays, where we do not want to generate a comma after the last item of the array. This predicate can be used for this (we do not generate the comma before the first item). - Mustache template

- The {{ mustache }} template

Generates text files using the {{ mustache }} template. Works with chunked RDF data.

Transformer, generates text files using the {{ mustache }} template and works with chunked RDF data.

Transformer, allows the user to zip input RDF files (Files Data Unit) to an output zip archive.

- Output zip file name

- File name under which the file will be visible in the pipeline, including extension.

Transformer, allows the user to convert RDF data (RDF data unit) to an RDF file (Files Data Unit).

- Output file name

- File name under which the file will be visible in the pipeline, including extension.

- Output format

- RDF serialization of the output file.

- IRI of the output graph

- IRI of the only named graph that will be created from the input triples. Valid only for the RDF Quad formats.

Transformer, allows the user to convert chunked RDF data to an RDF file.

- Output file name

- File name under which the file will be visible in the pipeline, including extension.

- Output format

- RDF serialization of the output file.

- IRI of the output graph

- IRI of the only named graph that will be created from the input triples. Valid only for the RDF Quad formats.

- Used prefixes as Turtle

- Allows the user to insert prefixes declaration in Turtle format to be used in Turtle file output.

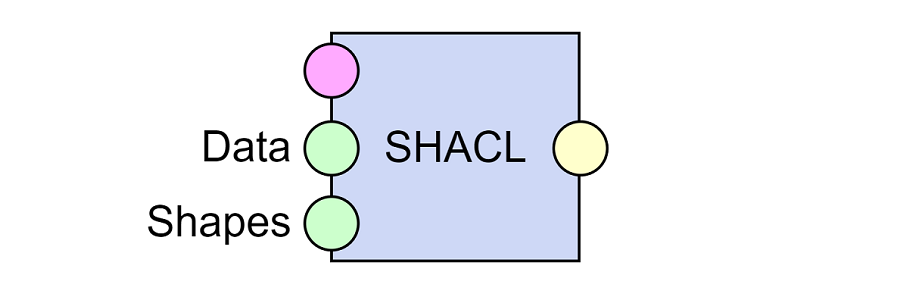

Transformer, allows the user to validate input RDF data using SHACL shapes.

- Fail on rule violation

- When on and a shape validation fails, the whole component, and therefore the pipeline, fails. When off, the failures are in the output report.

- Shapes to apply (in Turtle)

- An alternative way of providing the shapes graph. This is merged into the Shapes input.

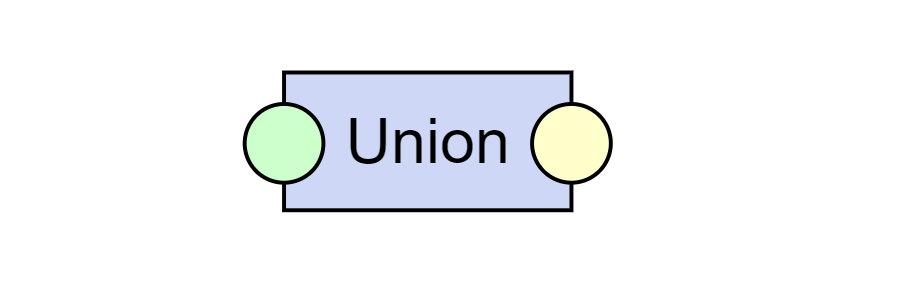

Transformer, represents a union of RDF data. Useful to make nicer pipelines where multiple edges would have to be duplicated otherwise.

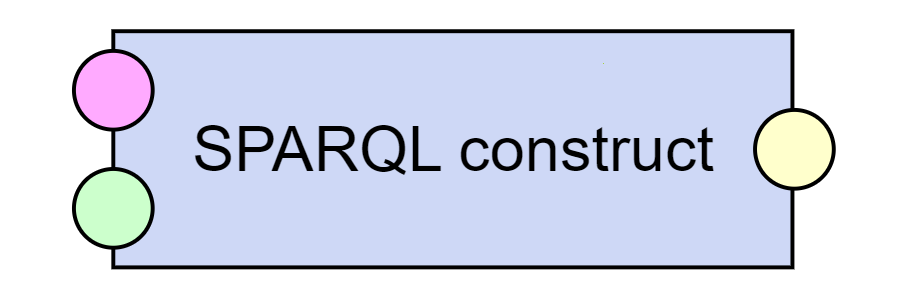

Transformer, allows the user to transform RDF data using a CONSTRUCT query. This means that based on the input data, output data will be generated using this query.

- SPARQL CONSTRUCT query

- Query for transformation of triples

Transformer, allows the user to transform chunked RDF data using a CONSTRUCT query. This means that based on the input data, output data will be generated using this query, preserving the data chunking.

- SPARQL CONSTRUCT query

- Query for transformation of triples

Creates multiple RDF files, possibly containing multiple named graphs, using a set of SPARQL queries.

Transformer, allows the user to create multiple RDF files including multiple RDF named graphs using a set of SPARQL CONSTRUCT queries.

- Use deduplication

- Individual SPARQL query results may contain duplicate triples or quads. This is a result of the way the SPARQL queries are evaluated. For smaller files this causes no issues as the duplicates are correctly resolved when the resulting file is further processed. For larger files, it is possible to turn on deduplication, which processes the results when they are serialized. This causes a slight performance penalty.

Transforms chunked RDF data combined with a smaller reference dataset using a SPARQL CONSTRUCT query.

Transformer, allows the user to transform chunked RDF data in combination with a smaller reference dataset using a CONSTRUCT query. This means that based on the input data and the reference data, output data will be generated using this query, preserving the data chunking.

- SPARQL CONSTRUCT query

- Query for transformation of triples

Transformer, allows the user to transform RDF data to a CSV file using a SELECT query.

- SPARQL SELECT query

- Query for transformation of triples

Transforms RDF data into multiple CSV files using multiple SPARQL SELECT queries.

Transformer, allows the user to transform RDF data into multiple CSV files using multiple SPARQL SELECT queries.

Transformer, allows the user to transform RDF data using SPARQL UPDATE queries. This means that the input data will be changed - added to and/or deleted from.

- SPARQL Update query

- Query for transformation of triples

Transformer, allows the user to transform chunked RDF data using SPARQL UPDATE queries. This means that the input data will be changed - added to and/or deleted from, preserving the data chunking.

- SPARQL Update query

- Query for transformation of triples

Transformer, compresses input files using gzip or bzip2.

- Format

- Output compression format. Can be gzip or bzip2.

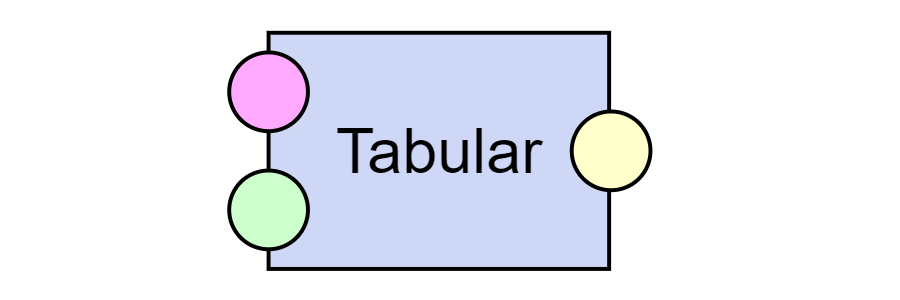

Transformer. Used to transform CSV files to RDF data according to the Generating RDF from Tabular Data on the Web W3C Recommendation. The first set of parameters is there simply do deal with CSV files not compliant with RFC 4180, which specifies a comma as a delimiter, the double-quote as a quote character, and UTF-8 as the encoding.

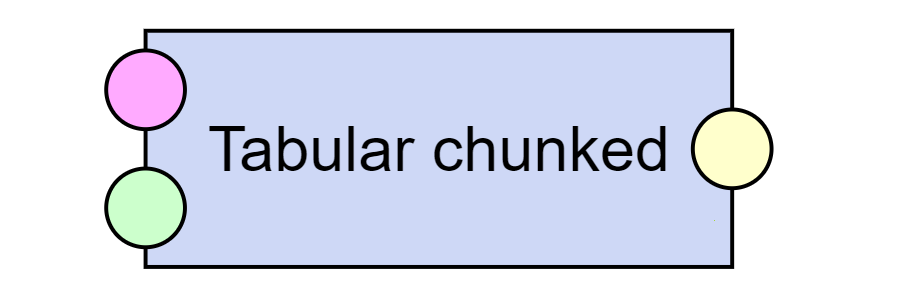

Transformer. Used to transform CSV files to RDF data chunks according to the Generating RDF from Tabular Data on the Web W3C Recommendation. This version of the Tabular component produces chunked RDF data for processing of a larger number of independent entities with lower memory consumption.

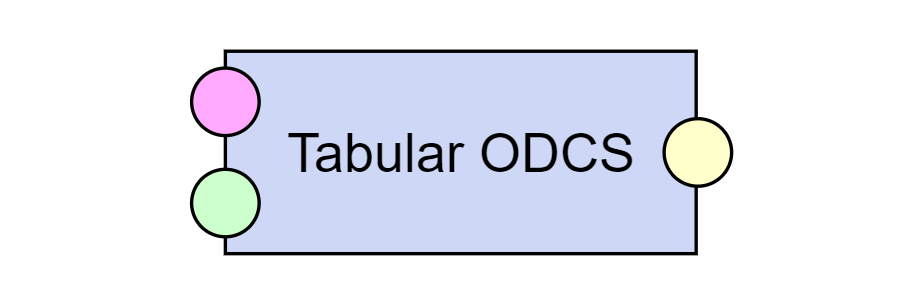

Transformer. Used to transform CSV files to RDF data. It is present only to allow smoother transition from UnifiedViews using the uv2etl tool. In all other cases, the CSV on the Web compatible Tabular component should be used instead.

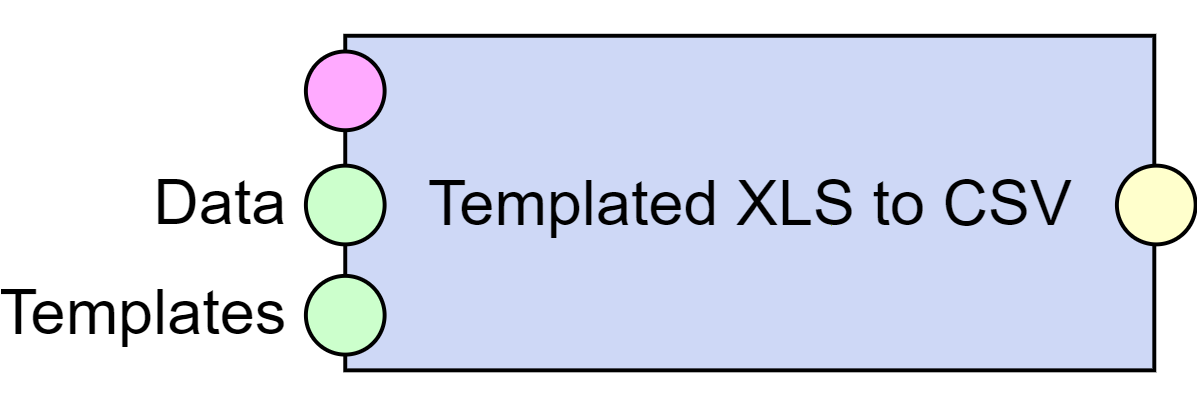

Transforms Excel files to CSV files using a template, which helps with print formatted files.

Transformer, allows the user to transform Microsoft Excel XLS (not XLSX) files to CSV files using a template, which helps with print formatted files.

- Template file prefix

- Prefix used by template files. The part of the template file name after the prefix should match the original file name.

Transformer, allows the user to unpack input zip, bzip2, gzip and 7z files.

- Unpack each file into separated directory

- When checked, a directory will be created for each input file. Otherwise, all files will be decompressed into a single directory

- Format

- Input archive format. Can be zip, bzip2, gzip, 7z or auto, which means the format will be determined by the file extension

- Skip file on error

- When an error occurs with a file when processing multiple files, continue execution

Transformer, allows the user to unzip input zip files.

- Unpack each zip into separated directory

- When checked, a directory will be created for each input zip file. Otherwise, all files will be decompressed into a single directory

Transformer, allows the user to parse lists and other structured texts from a literal, creating multiple literals.

- Predicate for input values

- IRI of the predicate linking to the literal to be processed

- Regular expression with groups

-

Java regular expression with named groups to match the items of the structured input text, e.g.

(?<g>[^\[\],]+) - Preserve language tag/datatype

- Sets the language tag or datatype of the input literal on the output literals

- Group name

- Name of the regular expression group to store as a new literal

- Group predicate

- IRI of the predicate linking to the output literals created from this regular expression group

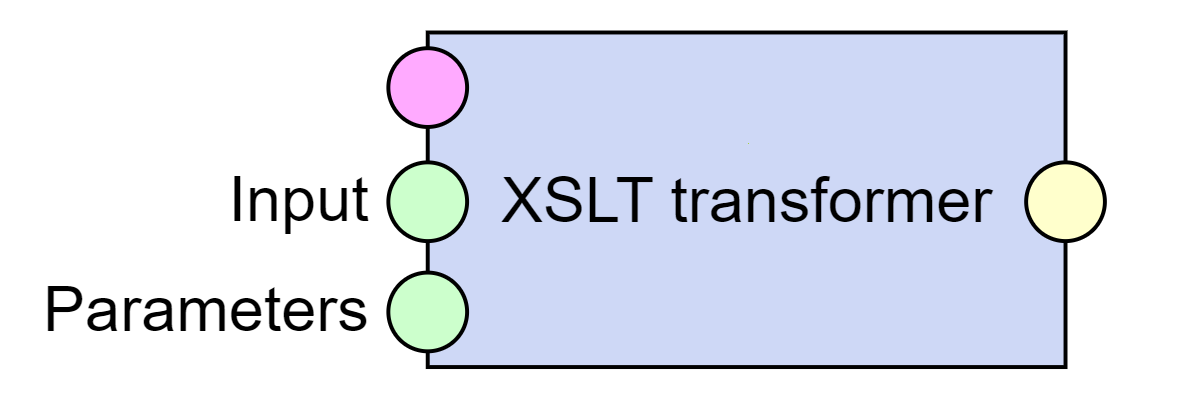

Transformer, allows the user to transform XML files using XSLT.

- New file extension

- XSLT allows to create arbitrary text files from XML. Expecially for RDF files it is important that the new file has a correct extension according to its format

- Number of threads used for transformation

- The XSLT processor is given this number of threads to use for each transformation

- XSLT Template

- The XSLT template used to transform the input XML files

Special purpose component, allows the user to delete a directory on a local file system.

- Directory to delete

- Directory to delete

Uses the SPARQL 1.1 Graph Store HTTP Protocol to remove a list of named graphs from an endpoint.

Special purpose component, allows the user to remove a list of named graphs from a SPARQL 1.1 Graph Store HTTP Protocol endpoint.

- Graph store protocol endpoint

- URL of the SPARQL 1.1 Graph Store HTTP Protocol endpoint, e.g.

http://localhost:8890/sparql-graph-crud-auth - Use authentication

- Use for endpoints requiring user authentication

- User name

- User name for the endpoint

- Password

- Password for the endpoint

Special purpose component, issues a set of arbitrary HTTP requests according to configuration.

- Number of threads used for execution

- Number of threads used for execution of the individual tasks. Network operations tend to be slow, so it is advantageous to process them in parallel.

- Threads per group

- For cases where there are multiple tasks aimed e.g. at the same host, this sets the number of threads to be used for a single group (e.g. a single host) as specified in the input tasks.

- Skip on error

- When an HTTP or another error is encountered in one of the tasks, this switch controls whether the execution of the whole pipeline should fail, or whether this is expected and therefore the execution should continue.

- Follow redirects

- Controls whether the component should follow HTTP 30x redirects.

- Encode input URL

- Percent encodes input URLs before using them as URIs in HTTP. Useful for cases where the target web server does not handle IRIs well of the input URLs may contain invalid characters.

Special purpose component, allows the user to execute LP-ETL pipelines.

- URL of LinkedPipes instance

- The instance on which a pipeline should be executed

- Pipeline IRI

- IRI of the pipeline to be executed

- Save debug data

- Determines, whether outputs and inputs of all components are saved or not. Choosing not to save them might increase execution performance, but in case of failures, debug data will not be available.

- Delete working data, including saved debug data, after successful execution

- On successful execution of a pipeline, debug data and logs can be deleted, to save space.

- Execution log level

- Determines the log level of the pipeline execution.

Special purpose component, allows the user to instruct OpenLink Virtuoso triplestore to load a file from the remote file system. Uses Virtuoso Bulk Loader functionality.

- Virtuoso JDBC connection string

- JDBC database connection string to connect to the Virtuoso iSQL port, e.g.

jdbc:virtuoso://localhost:1111/charset=UTF-8/ - Virtuoso user name

- User name to Virtuoso (default in Virtuoso is

dba) - Virtuoso password

- Password to Virtuoso. Note that this is plain text and in no way secured. (default in Virtuoso is

dba) - Remote directory with source files

- Directory on the remote file system (local to Virtuoso) where the files to be loaded are located

- Filename pattern to load

- A filename pattern of files to load. This can be either a single file like

data.ttlor a pattern such asdata*.ttl - Target graph IRI

- Target RDF graph for input triple files and default graph in quad files

- Clear target graph before loading

- When checked, executes SPARQL CLEAR GRAPH on the target graph before loading

- Execute checkpoint

- When checked, executes the

checkpoint;command after loading. Otherwise, checkpoint will be executed as configured in the Virtuoso instance. If that instance crashes beforecheckpoint;is executed, the loaded data will be lost. - Number of loaders to use

- Virtuoso can load multiple files in parallel. This specifies how many and it should correspond to the available number of cores on the server.

- Status update interval in seconds

- Interval for polling Virtuoso for load status update

Special purpose component, allows the user to instruct OpenLink Virtuoso triplestore to dump a named graph to the remote file system. Uses Virtuoso Bulk Loader functionality.

- Virtuoso JDBC connection string

- JDBC database connection string to connect to the Virtuoso iSQL port (typically

1111) - User name

- User name to Virtuoso

- Password

- Password to Virtuoso. Note that this is plain text and in no way secured

- Source graph IRI

- IRI of the RDF graph to be dumped

- Output path

- Directory on the remote file system (local to Virtuoso) where the files will be dumped